Apple’s Object Capture: An interesting evolution?

This year’s WWDC keynote offered a few new peeks at where product photography might be headed.

Here at PhotoSpherix HQ, we’ve been a Mac shop the entire time – well over two decades. For us, Macs offer a useful (if not vital) toolset that powers most of our daily 360 product photography workflow, and we’ve kept up with the platform as the technology has evolved.

Remember QuickTake cameras from the mid-to-late ’90s? Those were the first digital cameras we used in college. Around that same time, we began experimenting with panorama and object photography using QuickTime VR.

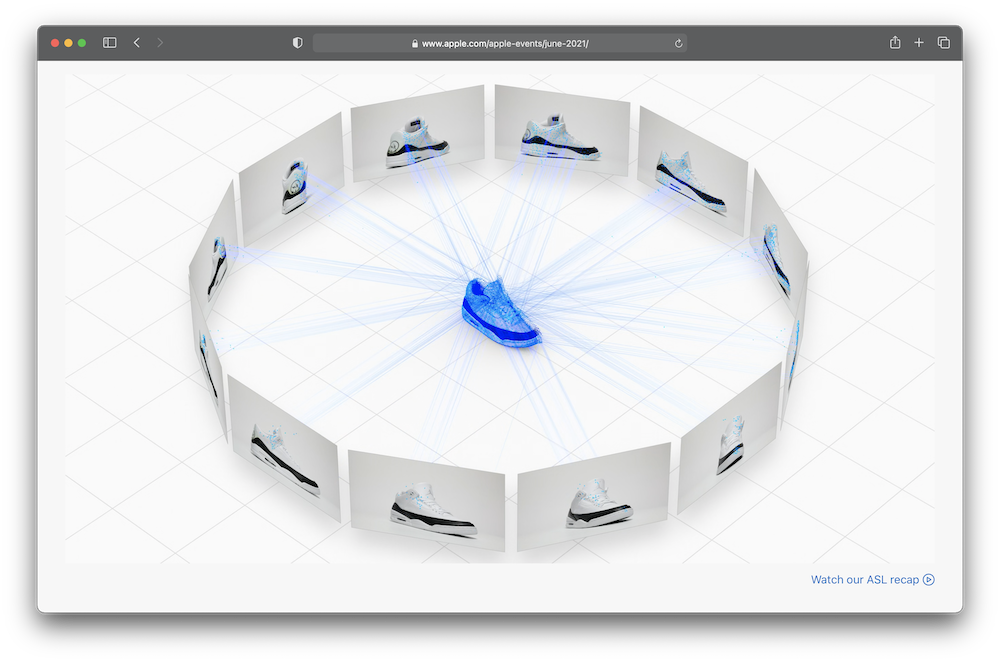

So, like a lot of Mac nerds – hardcore developers and fair-weather fans alike – most of us pay attention to the latest updates and peeks at what’s coming next. This week’s keynote included an interesting tidbit around the 1:35 mark: Object Capture.

In a nutshell, Object Capture is a macOS API that uses photogrammetry to turn a series of static 2D photos of an object into a photorealistic 3D model. Sound familiar?
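For the curious, here is a minimal Swift sketch of what driving Object Capture might look like, assuming macOS 12 (Monterey) and RealityKit’s `PhotogrammetrySession` as presented in the WWDC materials. The function names `makeConfiguration` and `reconstructModel`, the settings chosen, and any file paths are our own illustrative assumptions, not Apple sample code, and details may change before the final release.

```swift
import Foundation
import RealityKit

/// Illustrative session settings (our assumption, not a recommendation).
func makeConfiguration() -> PhotogrammetrySession.Configuration {
    var config = PhotogrammetrySession.Configuration()
    config.sampleOrdering = .sequential   // photos shot in order, e.g. on a turntable
    config.featureSensitivity = .normal   // default feature detection
    return config
}

/// Reconstructs a USDZ model from a folder of photos and returns the written file URL.
/// `imagesFolder` and `outputFile` are hypothetical caller-supplied locations.
func reconstructModel(imagesFolder: URL, outputFile: URL) async throws -> URL {
    let session = try PhotogrammetrySession(input: imagesFolder,
                                            configuration: makeConfiguration())

    // Queue a single request; progress and results arrive on `session.outputs`.
    try session.process(requests: [.modelFile(url: outputFile, detail: .reduced)])

    for try await output in session.outputs {
        switch output {
        case .requestProgress(_, let fraction):
            print(String(format: "Progress: %.0f%%", fraction * 100))
        case .requestComplete(_, .modelFile(let url)):
            return url                      // the model file was written
        case .requestError(_, let error):
            throw error                     // surface reconstruction failures
        case .processingCancelled:
            throw CancellationError()
        default:
            break                           // other events (skipped samples, etc.) are ignored
        }
    }
    // Reached only if the stream ends without producing a model file.
    throw CocoaError(.fileWriteUnknown)
}
```

From an async context, a call might look like `try await reconstructModel(imagesFolder: URL(fileURLWithPath: "/path/to/photos"), outputFile: URL(fileURLWithPath: "/path/to/product.usdz"))`, with both paths as placeholders. The `.reduced` detail level is just one of several options; which one suits web product viewers is exactly the kind of thing we still need to test.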

WWDC Object Capture

It’s still early, so we’ll keep an eye on the developer sessions, the early builds of the next macOS (called Monterey), and the latest Swift APIs. Will this kind of image capture benefit our clients? Stay tuned, and we’ll follow up in a few weeks with a deeper dive. Have a take? Drop us a line below.